Agricultural electricity demand is highly sensitive to water availability. Under “normal” conditions, the State Water Project (SWP) and Central Valley Project (CVP), as well as other surface water supplies, are key sources of irrigation water for many California farmers. Under dry conditions, these water sources can be sharply curtailed, even eliminated, at the same time that irrigation requirements are heightened. Farmers then must rely more heavily on groundwater, which requires more energy to pump than surface water because it must be lifted from greater depths.

Over extended droughts, such as the one from 2012 to 2016, groundwater levels decline and water must be pumped from ever greater depths, requiring even more energy to meet crops’ water needs. As a result, even as land is fallowed in response to water scarcity, significantly more energy is required to water remaining crops and livestock. Much less pumping is necessary in years with ample surface water supply, as rivers rise, soils become saturated, and aquifers recharge, raising groundwater levels.

The surface-groundwater dynamic results in significant variations in year-to-year agricultural electricity sales. Yet, PG&E has assigned the agricultural customer class a revenue responsibility based on the assumption that “normal” water conditions will prevail every year, without accounting for how inevitable variations from these circumstances will affect rates and revenues for agricultural and other customers.

This assumption results in an imbalance in revenue collection from the agricultural class that does not correct itself even over long time periods, harming agricultural customers most in drought years, when they can least afford it. Analysis presented by M.Cubed on behalf of the Agricultural Energy Consumers Association (AECA) in the 2017 PG&E General Rate Case (GRC) demonstrated that overcollections can be expected to exceed $170 million over two years of typical drought conditions, with an expected overcollection of $34 million in a two-year period. This collection imbalance also increases rate instability for other customer classes.

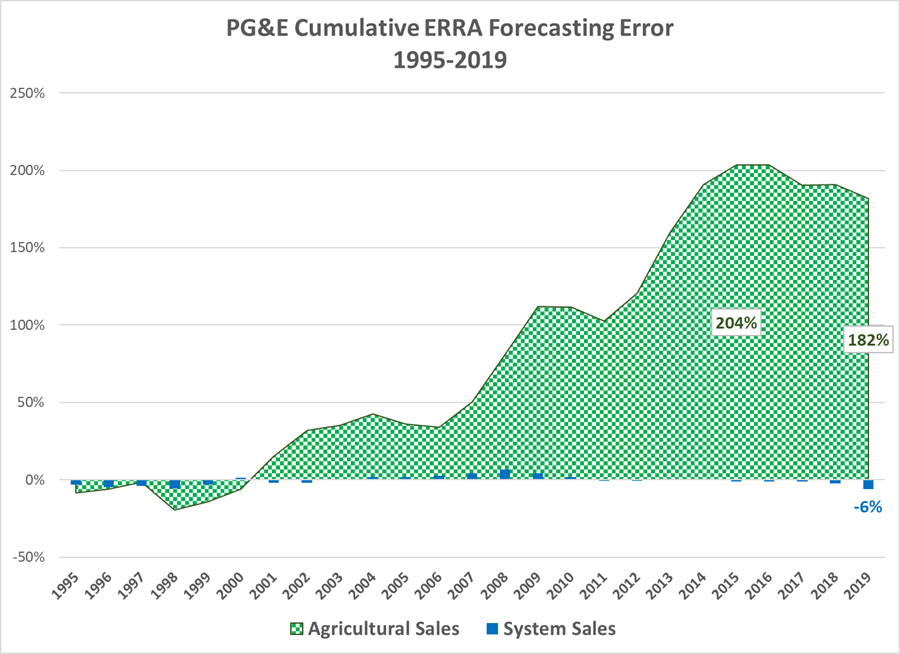

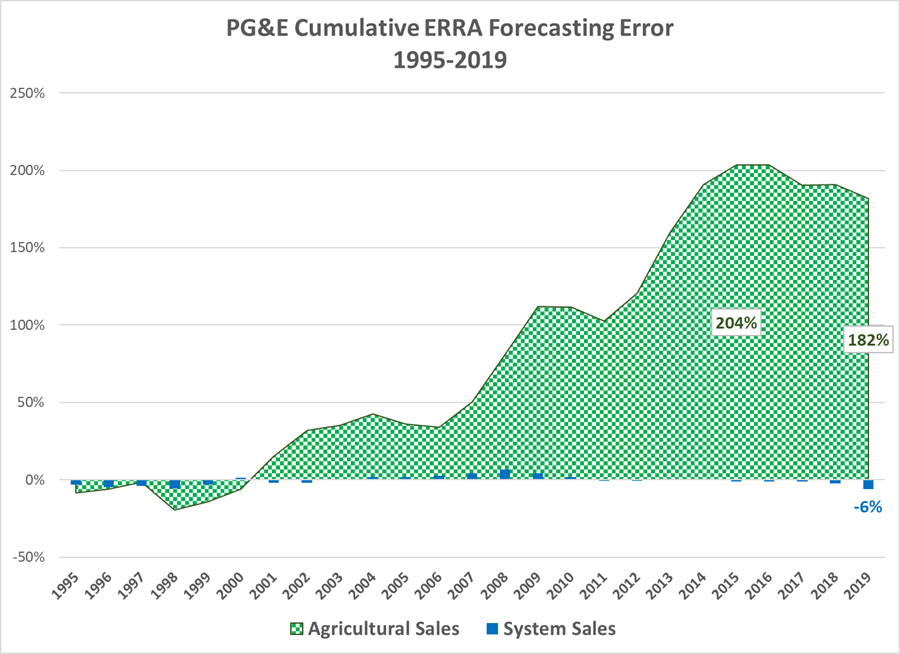

Figure-1 compares the forecasted loads for agriculture and system-wide that were used to set rates in the annual ERRA Forecast proceedings (and in GRC Phase 2 every three years) with the actual recorded sales for 1995 to 2019. Notably, the largest forecasting errors for system-wide load were a sales overestimate of 4.5% in 2000 and a shortfall of 3.7% in 2019, while agricultural mis-forecasts range from an under-forecast of 39.2% in the midst of an extended drought in 2013 to an over-forecast of 18.2% in 1998, one of the wettest years on record. Load volatility in the agricultural sector is extreme in comparison to other customer classes.

Figure-2 shows the cumulative error caused by inadequate treatment of agricultural load volatility over the last 25 years. An unbiased forecasting approach would reflect a cumulative error of zero over time. The error in PG&E’s system-wide forecast has largely balanced out, even though the utility’s load pattern has shifted from significant growth over the first 10 years to stagnation and even decline. PG&E apparently has been able to adapt its forecasting methods for other classes relatively well over time.

The accumulated error for agricultural sales forecasting tells a different story. Over a quarter century, the cumulative error reached 182%, nearly twice the annual sales for the agricultural class. This cumulative error has consequences for the relative share of revenue collected from agricultural customers compared to other customers, with growers significantly overpaying during the period.
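The cumulative error measure discussed above can be illustrated with a short sketch. It assumes the annual error is expressed as a percentage of the forecast, with a positive value meaning an under-forecast; that sign convention, the function names, and the sample numbers are assumptions made for illustration and are not taken from the PG&E or AECA filings.

```python
from itertools import accumulate


def percent_errors(forecast, actual):
    """Annual forecast error as a percentage of the forecast.

    Assumed convention: positive means actual sales exceeded the
    forecast (an under-forecast), negative means an over-forecast.
    """
    return [100.0 * (a - f) / f for f, a in zip(forecast, actual)]


def cumulative_error(forecast, actual):
    """Running sum of the annual percentage errors.

    An unbiased forecast should hover around zero over time.
    """
    return list(accumulate(percent_errors(forecast, actual)))


# Illustrative numbers only, not recorded PG&E sales.
forecast = [100.0, 100.0, 100.0, 100.0]
actual = [110.0, 95.0, 120.0, 105.0]

for year, total in enumerate(cumulative_error(forecast, actual), start=1):
    print(f"year {year}: cumulative error {total:+.1f}%")
```

A running total that keeps drifting in one direction, rather than returning toward zero, is the signature of a biased forecast.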

Agricultural load forecasting can be revised to better address how variations in water supply availability drive agricultural load. Most importantly, the final forecast should be constructed from a weighted average of forecasted loads under normal, wet and dry conditions. The forecast of agricultural accounts also must be revamped to include these elements. In addition, the load forecast should include the influence of rates and a publicly available data source on agricultural income such as that provided by the USDA’s Economic Research Service.

The Forecast Model Can Use An Additional Drought Indicator and Forecasted Agricultural Rates to Improve Its Forecast Accuracy

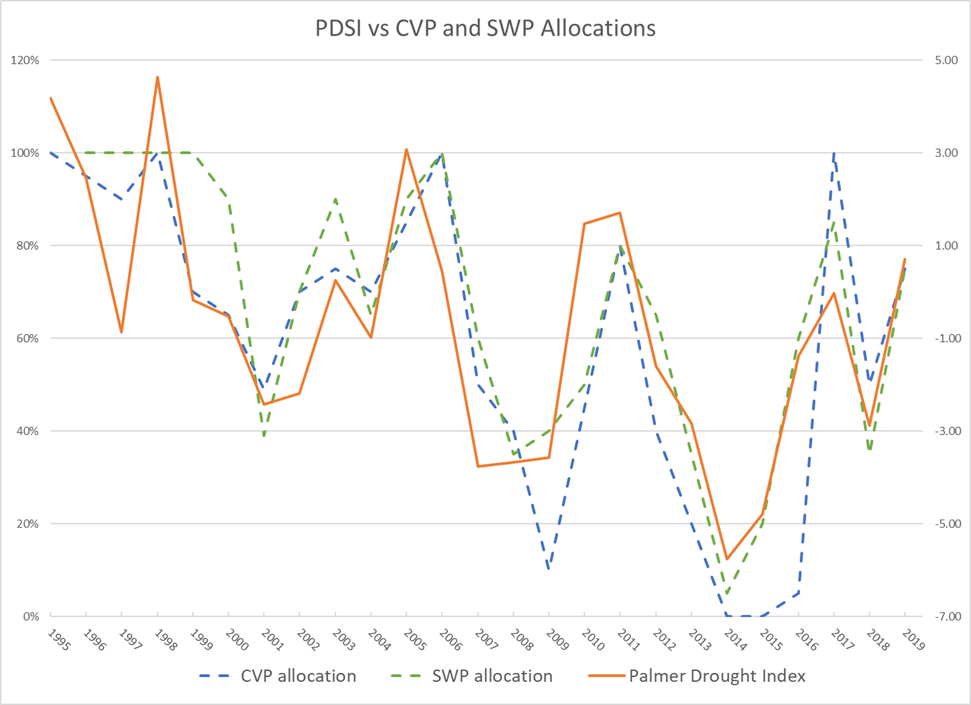

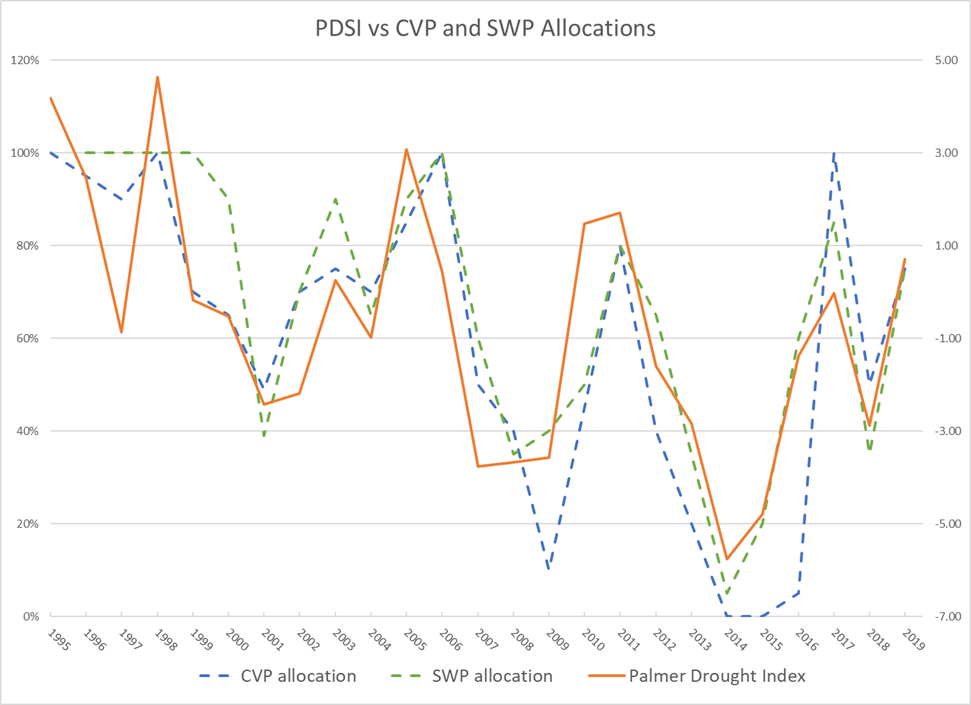

The more direct relationship for determining agricultural class energy needs is between the allocation of surface water via state and federal water projects and the need to pump groundwater when adequate surface water is not available from the SWP and federal CVP. The SWP and CVP are critical to California agriculture because little precipitation falls during the state’s Mediterranean-climate summer and snow-melt runoff must be stored and delivered via aqueducts and canals. Surface water availability, therefore, is the primary determinant of agricultural energy use, while precipitation and related factors, such as drought, are secondary causes in that they are only partially responsible for surface water availability. Other factors, such as state and federal fishery protections, substantially restrict water availability and project pumping operations, greatly limiting surface water deliveries to San Joaquin Valley farms.

We found that the Palmer Drought Severity Index (PDSI) is highly correlated with contract allocations for deliveries through the SWP and CVP, reaching 0.78 for both, as shown in Figure-3. (Note that the correlation between the current and lagged PDSI is only 0.34, which indicates that both variables can be included in the regression model.) Of even greater interest and relevance to PG&E’s forecasting approach, the correlation between the previous year’s PDSI and project water deliveries is almost as strong, 0.56 for the SWP and 0.53 for the CVP. This relationship can be seen also in Figure-3, as the PDSI line appears to lead changes in the project water deliveries. This strong relationship with the lagged indicator is not surprising, as both the California Department of Water Resources and U.S. Bureau of Reclamation account for remaining storage and streamflow that is a function of soil moisture and aquifers in the Sierras.
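The current-year and lagged correlations described above can be computed along the following lines. This is a minimal sketch: the `pearson` and `lagged_correlation` helpers and the sample series are hypothetical and are not the data or code behind the reported coefficients.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def lagged_correlation(index, series, lag=1):
    """Correlate series in year t with index in year t - lag."""
    if lag == 0:
        return pearson(index, series)
    return pearson(index[:-lag], series[lag:])


# Hypothetical annual values, for illustration only.
pdsi = [1.5, -0.5, -2.0, -3.5, 0.5, 2.5, -1.0, -2.5]
allocation_pct = [90, 70, 45, 20, 60, 85, 55, 35]

print("same year:    ", round(lagged_correlation(pdsi, allocation_pct, 0), 2))
print("one-year lag: ", round(lagged_correlation(pdsi, allocation_pct, 1), 2))
# Correlating the index with its own lag is a quick multicollinearity check.
print("PDSI vs. lag: ", round(lagged_correlation(pdsi, pdsi, 1), 2))
```

The last line mirrors the check noted in the text: a low correlation between the current and lagged index suggests the two can enter a regression together without severe multicollinearity.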

Further, comparing the inverse of water delivery allocations (i.e., the undelivered contract shares) to the annual agricultural sales, we can see how agricultural load has risen since 1995 as the contract allocations delivered have fallen (i.e., the undelivered amount has risen), as shown in Figure-4. The decline in the contract allocations is only partially related to the amount of precipitation and runoff available. In 2017, which was among the wettest years on record, SWP Contractors received only 85% of their allocations, while the SWP provided 100% every year from 1996 to 1999. The CVP has reached a 100% allocation only once since 2006, while it regularly delivered above 90% prior to 2000. Changes in contract allocations dictated by regulatory actions are clearly a strong driver in the growth of agricultural pumping loads, but an ongoing drought appears to be key here. The combination of the forecasted PDSI and the lagged PDSI of the just-concluded water year can be used to capture this relationship.

Finally, a “normal” water year is rare, occurring in only 20% of the last 40 years. Over time, the best representation of both surface water availability and the electrical load dependent on it is a weighted average across the probabilities of different water year conditions.
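As a rough illustration of the weighted-average approach, the sketch below combines condition-specific load forecasts using water-year probabilities. Only the 20% share for normal years comes from the text; the wet and dry shares, the load values, and the function name are hypothetical placeholders.

```python
def weighted_forecast(loads_by_condition, probabilities):
    """Probability-weighted average of condition-specific load forecasts."""
    if set(loads_by_condition) != set(probabilities):
        raise ValueError("loads and probabilities must cover the same conditions")
    if abs(sum(probabilities.values()) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(loads_by_condition[c] * probabilities[c] for c in loads_by_condition)


# The 20% normal-year share is from the text; the wet/dry split is assumed.
probabilities = {"normal": 0.2, "wet": 0.4, "dry": 0.4}

# Hypothetical annual agricultural sales forecasts in GWh.
loads_gwh = {"normal": 5000.0, "wet": 4000.0, "dry": 7000.0}

expected_load = weighted_forecast(loads_gwh, probabilities)
print(f"normal-year forecast: {loads_gwh['normal']:.0f} GWh")
print(f"weighted forecast:    {expected_load:.0f} GWh")
```

Because dry-year loads sit further above the normal-year load than wet-year loads sit below it in this example, the weighted forecast exceeds the normal-year forecast, which is the kind of gap a "normal conditions" assumption leaves out.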

Proposed Revised Agricultural Forecast

We prepared a new agricultural load forecast for 2021 implementing the changes recommended herein. In addition, the forecasted average agricultural rate was added to the model and proved to be statistically significant. The account forecast was developed using most of the same variables as the sales forecast to reflect similarities in the drivers of both sales and accounts.

Figure-5 compares the performance of AECA’s proposed model to PG&E’s model filed in its 2021 General Rate Case. The backcasted values from the AECA model have a correlation coefficient of 0.973 with recorded values,[1] while PG&E’s sales forecast methodology has a correlation of only 0.742.[2] Unlike PG&E’s model, almost all of the parameter estimates are statistically significant at the 99% confidence level, with only summer and fall rainfall being insignificant.[3]

AECA’s accounts forecast model reflects similar performance, with a correlation of 0.976. The backcast and recorded data are compared in Figure-6. For water managers, this chart shows how new groundwater wells are driven by a combination of factors such as water conditions and electricity prices.