I coauthored this article in the Los Angeles Daily News with Ahmad Faruqui and Andy Van Horn. We critique the proposed income-graduated fixed charge (IGFC) being considered at the California Public Utilities Commission.

Meredith Fowlie wrote this post on the Energy Institute at Haas blog about the proposal to drastically increase California utilities’ residential fixed charges. I posted this comment (with some additions and edits) in response.

First, infrastructure costs are responsive to changes in both demand and added generation. It’s just that those costs won’t change for a customer tomorrow; it will take a decade. Given how fast transmission retail rates have risen, and that they include none of the added fixed costs listed here, the marginal cost must be substantially above the current average retail rates of 4 to 8 cents/kWh.

Further, if a customer is being charged a fixed cost for capacity that is being shared with other customers, e.g., distribution and transmission wires, they should be able to sell that capacity to other customers on a periodic basis. While many economists love auctions, the mechanism with the lowest ancillary transaction costs is a dealer market akin to a grocery store, which buys stocks of goods and then resells them. (The New York Stock Exchange is a type of dealer market.) The most likely unit of sale would be cents per kWh, which is the same as today. In this case, the utility would be the dealer, just as today. So we are already in the same situation.

Airlines are another equally capital-intensive industry. Yet no one pays a significant fixed charge (there are some membership clubs) and then just a small incremental charge for fuel and cocktails. Fares are based on a representative long-run marginal cost of acquiring and maintaining the fleet. Airlines maintain a network just as utilities do. Economies of scale matter in building an airline. The only difference is that utilities are able to monopolistically capture their customers and then appeal to state-sponsored regulators to impose prices.

Why are California’s utility rates 30 to 50% or more above the current direct costs of serving customers? The IOUs, and PG&E in particular, overprocured renewables in the 2010-2012 period at exorbitant prices (averaging $120/MWh), in part in an attempt to block entry of CCAs. That squandered the opportunity to gain the economic benefits from learning by doing that led to the rapid decline in solar and wind prices over the next decade. In addition, PG&E refused to sell a part of its renewable PPAs to the new CCAs as they started up in the 2014-2017 period. On top of that, PG&E ratepayers paid an additional 50% on an already expensive Diablo Canyon due to the terms of the 1996 Settlement Agreement. (I made the calculations during that case for a client.) And on the T&D side, I pointed out beginning in 2010 that the utilities were overforecasting load growth and that their recorded data showed stagnant loads. The peak load from 2006 was the record until 2022, and energy loads have remained largely constant, even declining over the period. The utilities finally started listening in the last couple of years, but all of that unneeded capital is baked into rates. All of these factors point not to the state or even the CPUC (except as an inept monitor) as being at fault, but rather to the utilities’ mismanagement.

Using Southern California Edison’s (SCE) own numbers, we can illustrate the point. SCE’s total bundled marginal costs in its rate filing are 10.50 cents per kWh for the system and 13.64 cents per kWh for residential customers. In comparison, SCE’s average system rate is 17.62 cents per kWh or 68% higher than the bundled marginal cost, and the average residential rate of 22.44 cents per kWh is 65% higher. From SCE’s workpapers, these cost increases come primarily from four sources.
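The markups quoted above can be checked with a few lines of arithmetic. This is a minimal sketch using only the four SCE figures cited in the paragraph; the function name `markup_pct` is illustrative and does not come from SCE's filing.

```python
def markup_pct(average_rate, marginal_cost):
    """Percent by which an average rate exceeds the corresponding marginal cost."""
    return (average_rate / marginal_cost - 1.0) * 100.0

# Figures in cents per kWh, as quoted in the text from SCE's rate filing
system_markup = markup_pct(17.62, 10.50)
residential_markup = markup_pct(22.44, 13.64)

print(f"System average rate markup over marginal cost: {system_markup:.0f}%")
print(f"Residential rate markup over marginal cost: {residential_markup:.0f}%")
```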

An issue raised as rooftop solar spreads farther is the claim that rooftop solar customers are not paying their fair share and instead are imposing costs on other customers, who on average have lower incomes than those with rooftop solar. Yet the math behind the true rate burden for other customers is quite straightforward: if 10% of the customers are paying essentially zero (which they are actually not), the costs for the remaining 90% of the customers cannot go up more than 11% [100%/(100%-10%) = 111%, or an 11% increase]. If low-income customers pay only 70% of that 11%, then their bills might go up about 8%, hardly a “substantial burden.” (70% x 11% = 7.7%)
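The upper bound in that paragraph is simple to reproduce. The sketch below assumes, as the text does for the sake of argument, that the non-paying share contributes nothing and that total costs are unchanged; the function name and the 70% low-income share parameter are just labels for the numbers in the paragraph. Carrying the unrounded 11.1% through gives about 7.8% rather than 7.7%, which still rounds to the "about 8%" cited.

```python
def max_rate_increase(share_paying_zero):
    """Upper bound on the rate increase for remaining customers when a
    share of customers pays nothing and total costs stay fixed."""
    return 1.0 / (1.0 - share_paying_zero) - 1.0

increase = max_rate_increase(0.10)       # 10% of customers pay essentially zero
low_income_increase = 0.70 * increase    # low-income customers bear 70% of it

print(f"Maximum increase for remaining customers: {increase:.1%}")
print(f"Increase for low-income customers: {low_income_increase:.1%}")
```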

As for aligning incentives for electrification, we proposed a more direct alternative on behalf of the Local Government Sustainable Energy Coalition, in which those who replace a gas appliance or furnace with an electric one receive an allowance (much like the all-electric baseline) priced at marginal cost while the remainder is priced at the higher fully-loaded rate. That would reduce the incentive to exit the grid when electrifying while still rewarding those who made past energy efficiency and load reduction investments.

The solution to high rates cannot come from simple rate design; as Old Surfer Dude points out, wealthy customers are just going to exit the grid and self-provide. Rate design is just rearranging the deck chairs. The CPUC tried the same thing in the late 1990s with telecom on the assumption that customers would stay put. Instead customers migrated to cell phones and dropped their land lines. The real solution is going to require some good old-fashioned capitalism, with shareholders and associated stakeholders absorbing the costs of their mistakes and greed.

I was interviewed by a Los Angeles Times reporter about the recent power outages in Northern California as a result of the wave of storms. Our power went out for 48 hours on New Year’s Eve and again for 12 hours the next weekend:

After three days without power during this latest storm series, Davis resident Richard McCann said he’s seriously considering implementing his own microgrid so he doesn’t have to rely on PG&E.

“I’ve been thinking about it,” he said. McCann, whose work focuses on power sector analysis, said his home lost power for about 48 hours beginning New Year’s Eve, then lost it again after Saturday for about 12 hours.

While the storms were severe across the state, McCann said Davis did not see unprecedented winds or flooding, adding to his concerns about the grid’s reliability.

He said he would like to see California’s utilities “distributing the system, so people can be more independent.”

“I think that’s probably a better solution rather than trying to build up stronger and stronger walls around a centralized grid,” McCann said.

Several others were quoted in the article offering microgrids as a solution to the ongoing challenge.

Widespread outages occurred in Woodland and Stockton despite winds not being exceptionally strong compared with recent experience. Given the widespread outages two years ago and the three “blue sky” multi-hour outages we had in 2022 (and none during the September heat storm when 5,000 Davis customers lost power), I’m doubtful that PG&E is ready for what’s coming with climate change.

PG&E instead is proposing to invest up to $40 billion in the next eight years to protect service reliability for 4% of their customers via undergrounding wires in the foothills, which will raise our rates up to 70% by 2030! There’s an alternative cost-effective solution that would cost 80% to 95% less sitting before the Public Utilities Commission, but it is unlikely to be approved. There’s another opportunity to head off PG&E and send some of that money towards fixing our local grid coming up this summer under a new state law.

While winds have been strong, they have not been at the 99%+ range of experience that should lead to multiple catastrophic outcomes in short order. And having two major events within a week, plus the outage in December 2020, shows that these are not statistically unusual. We experienced similar fierce winds without such extended outages. Prior to 2020, Davis experienced only two extended outages in the previous two decades, in 1998 and 2007. Clearly the lack of maintenance on an aging system has caught up with PG&E. PG&E should reimagine its rural undergrounding program to mitigate wildfire risk to use microgrids instead. That will free up most of the billions it plans to spend on less than 4% of its customer base to instead harden its urban grid.

M.Cubed Partner David Mitchell was interviewed for this October 24 article on rising residential water rates in California:

Across the state, water utility prices are escalating faster than other “big ticket” items such as college tuition or medical costs, according to David Mitchell, an economist specializing in water.

“Cost containment is going to become an important issue for the sector in the coming years” as climate change worsens drought and water scarcity, he said.

The price of water on the Nasdaq Veles California Water Index, which is used primarily for agriculture, hit $1,028.86 for an acre-foot on Oct. 20 — a roughly 40% increase since the start of the year. An acre-foot of water, or approximately 326,000 gallons, is enough to supply three Southern California households for a year.

Mitchell said there are short- and long-term factors contributing to rising water costs.

Long-term factors include the replacement of aging infrastructure, new treatment standards, and investments in insurance, projects and storage as hedges against drought.

In the short term, however, drought restrictions play a significant role. When water use drops, urban water utilities — which mostly have fixed costs — earn less revenue. They adjust their rates to recover that revenue, either during or after the drought.

“So it’s not right now a pretty picture,” Mitchell said.

David Mitchell’s practice areas include benefit-cost analysis, regional economic impact assessment, utility rate setting and financial planning, and natural resource valuation. Mr. Mitchell has in-depth knowledge of the water supply, water quality and environmental management challenges confronting natural resource management agencies.

The recent reliability crises in the electricity markets of California and Texas ask us to reconsider the supposed lessons from the most significant extended market crisis to date: the 2000-01 California electricity crisis. I wrote a paper two decades ago, The Perfect Mess, that described the circumstances leading up to the event. There have been two other common threads about supposed lessons, but I do not accept either as a true solution; both are really about risk sharing once this type of crisis ensues rather than being useful for preventing similar market malfunctions. Instead, the real lesson is that load serving entities (LSEs) must be able to sign long-term agreements that are unaffected and unfettered directly or indirectly by variations in daily and hourly markets so as to eliminate incentives to manipulate those markets.

The first and most popular explanation among many economists is that consumers did not see the swings in the wholesale generation prices in the California Power Exchange (PX) and California Independent System Operator (CAISO) markets. In this rationale, if consumers had seen the large increases in costs, as much as 10-fold over the pre-crisis average, they would have reduced their usage enough to limit the gains from manipulating prices. Consumers should have shouldered the risks in the markets in this view and their cumulative creditworthiness could have ridden out the extended event.

This view is not valid for several reasons. The first and most important is that the compensation to utilities for stranded asset investment was predicated on calculating the difference between a fixed retail rate and the utilities’ cost of service for transmission and distribution plus the wholesale cost of power in the PX and CAISO markets. Until May 2000, that difference was always positive and the utilities were well on the way to collecting their Competition Transition Charge (CTC) in full before the end of the transition period on March 31, 2002. The deal was that if the utilities were going to collect their stranded investments, then consumers’ rates would be protected for that period. The risk of stranded asset recovery was entirely the utilities’, and both the California Public Utilities Commission in its string of decisions and the State Legislature in Assembly Bill 1890 were very clear about this assignment.

The utilities had chosen to support this approach linking asset value to ongoing short term market valuation over an upfront separation payment proposed by Commissioner Jesse Knight. The upfront payment would have enabled linking power cost variations to retail rates at the outset, but the utilities would have to accept the risk of uncertain forecasts about true market values. Instead, the utilities wanted to transfer the valuation risk to ratepayers, and in return ratepayers capped their risk at the current retail rates as of 1996. Retail customers were to be protected from undue wholesale market risk and the utilities took on that responsibility. The utilities walked into this deal willingly and as fully informed as any party.

As the transition period progressed, the utilities transferred their collected CTC revenues to their respective holding companies to be disbursed to shareholders instead of prudently holding them as reserves until the end of the transition period. When the crisis erupted, the utilities quickly drained what cash they had left and had to go to the credit markets. In fact, if they had retained the CTC cash, they would not have had to go to the credit markets until January 2001, based on the accounts that I was tracking at the time, and PG&E would not have had a basis for declaring bankruptcy.

The CTC left the market wide open to manipulation and it is unlikely that any simple changes in the PX or CAISO markets could have prevented this. I conducted an analysis for the CPUC in May 2000 as part of its review of Pacific Gas & Electric’s proposed divestiture of its hydro system, based on a method developed by Catherine Wolfram in 1997. The finding was that a firm owning as little as 1,500 MW (which included most merchant generators at the time) could profitably gain from price manipulation for at least 2,700 hours in a year. The only market-based solution was for LSEs including the utilities to sign longer-term power purchase agreements (PPAs) for a significant portion (but not 100%) of the generators’ portfolios. (Jim Sweeney briefly alludes to this solution before launching into his preferred linkage of retail rates and generation costs.)

Unfortunately, State Senator Steve Peace introduced a budget trailer bill in June 2000 (as Public Utilities Code Section 355.1, since repealed) that forced the utilities to sign PPAs only through the PX which the utilities viewed as too limited and no PPAs were consummated. The utilities remained fully exposed until the California Department of Water Resources took over procurement in January 2001.

The second problem was a combination of unavailable technology and billing systems. Customers did not yet have smart meters and paper bills could lag as much as two months after initial usage. There was no real way for customers to respond in near real time to high generation market prices (even assuming that they would have been paying attention to such an obscure market). And as we saw in Texas during Winter Storm Uri in 2021, the only available consumer response for too many was to freeze to death.

This proposed solution is really about shifting risk from utility shareholders to ratepayers, not a realistic market solution. But as discussed above, at the core of the restructuring deal was a sharing of risk between customers and shareholders–a deal that shareholders failed to keep when they transferred all of the cash out of their utility subsidiaries. If ratepayers are going to take on the entire risk (as keeps coming up) then either authorized return should be set at the corporate bond debt rate or the utilities should just be publicly owned.

The second explanation of why the market imploded was that the decentralization created a lack of coordination in providing enough resources. In this view, the CDWR rescue in 2001 righted the ship, but the exodus of the community choice aggregators (CCAs) again threatens system integrity. The preferred solution for the CPUC is now to reconcentrate power procurement and management with the IOUs, thus killing the remnants of restructuring and markets.

The problem is that the current construct of the PCIA exit fee similarly leaves the market open to potential manipulation. And we’ve seen how virtually unfettered procurement between 2001 and the emergence of the CCAs resulted in substantial excess costs.

The real lessons from the California energy crisis are two fold:

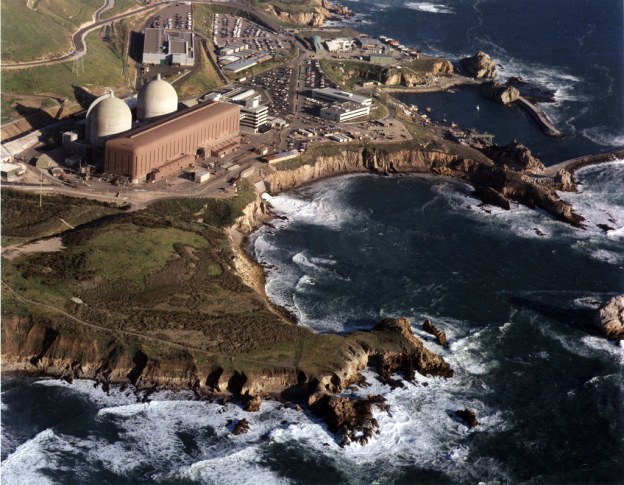

More calls for keeping Diablo Canyon have come out in the last month, along with a proposal to match the project with a desalination project that would deliver water to somewhere. (And there has been pushback from opponents.) There are better solutions, as I have written about previously. Unfortunately, those who are now raising this issue missed the details and nuances of the debate in 2016 when the decision was made, and they are not well informed about Diablo’s situation.

One important fact is that it is not clear whether continued operation of Diablo is safe. Unit No. 1 has one of the most embrittled reactor pressure vessels in the U.S., which is at risk during a sudden shutdown event.

Another is that the decision would require overriding a State Water Resources Control Board decision that required ending the use of once-through cooling with ocean water. The cost of complying, 10 cents per kilowatt-hour at current operational levels and in excess of 12 cents under more likely operations, was what led to the closure decision.

So what could the state do fairly quickly for 12 cents per kWh instead? Install distributed energy resources focused on commercial and community-scale solar. These projects cost between 6 and 9 cents per kWh and avoid transmission costs of about 4 cents per kWh. They also can be paired with electric vehicles to store electricity and fuel the replacement of gasoline cars. Microgrids can mitigate wildfire risk more cost effectively than undergrounding, so we can save another $40 billion there too. Most importantly they can be built in a matter of months, much more quickly than grid-scale projects.

As for the proposal to build a desalination plant, pairing one with Diablo would be both overkill and a logistical puzzle. The Carlsbad plant produces 56,000 acre-feet annually for the San Diego County Water Authority and uses 35 MW, or 1.6% of Diablo’s output. The Central Coast, where Diablo is located, has a State Water Project allocation of 45,000 acre-feet, which is not even fully used now. A plant built to use all of Diablo’s output could produce 3.5 million acre-feet, but the State Water Project would need to be significantly modified to move the water either back to the Central Valley or beyond Santa Barbara to Ventura. All of that adds up to a large cost on top of what is already a costly source of water at $2,500 to $2,800 per acre-foot.
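The scale mismatch can be illustrated with the figures in the paragraph above. This is a rough sketch that assumes desalination output scales linearly with the power consumed, a simplification made here for illustration and not an engineering estimate; the variable names are hypothetical.

```python
# Figures quoted in the text
carlsbad_output_af = 56_000       # acre-feet per year from the Carlsbad plant
carlsbad_load_mw = 35             # power used by the Carlsbad plant
share_of_diablo = 0.016           # Carlsbad's load as a share of Diablo's output
central_coast_swp_af = 45_000     # Central Coast State Water Project allocation

# Assumption: water output scales linearly with the power consumed
implied_diablo_mw = carlsbad_load_mw / share_of_diablo
full_output_af = carlsbad_output_af / share_of_diablo
multiple_of_allocation = full_output_af / central_coast_swp_af

print(f"Implied Diablo output: {implied_diablo_mw:,.0f} MW")
print(f"Water from a plant using all of it: {full_output_af:,.0f} acre-feet/year")
print(f"Multiple of the Central Coast allocation: {multiple_of_allocation:.0f}x")
```

Under that assumption, a plant sized to Diablo would produce many times the Central Coast's entire State Water Project allocation, which is why the water would have to be moved elsewhere.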

Severin Borenstein wrote another blog attacking rooftop solar (a pet peeve of his for at least a decade because these weren’t being installed in “optimal” locations in the state) entitled “Myths that Solar Owners Tell Themselves.” Unfortunately he set up a number of “strawman” arguments that really have little to do with the actual issues being debated right now at the CPUC. Here are responses to each of his “myths”:

Myth #1 – Customers are paid only 4 cents per kWh for exports: He’s right in part, but then he ignores the fact that almost all of the power sent out from rooftop panels is used by their neighbors and never gets to the main part of the grid. The utility is redirecting the power down the block.

Myth #2 – The utility sells the power purchased at retail back to other customers at retail so it’s a wash: Borenstein’s claim ignores the fact that when the NEM program began the utilities were buying power that cost more than the retail rate at the time. During NEM 1.0 the IOUs were paying in excess of 10c/kWh for renewable portfolio standard (RPS) power purchase agreements (PPAs). Add the 4c/kWh for transmission and that’s more than the average rate of 13c/kWh that prevailed during that time. NEM 2.0 added a correction for TOU pricing (that PG&E muffled by including only the marginal generation cost difference by TOU rather than scaling) and that adjusted the price some. But those NEM customers signed up not knowing what the future retail price would be. That’s the downside of failing to provide a fixed price contract tariff option for solar customers back then. So now the IOUs are bearing the consequences of yet another bad management decision because they were in denial about what was coming.

Myth #3 – Rooftop solar is about disrupting the industry: Here Borenstein appears to be unaware of the Market Street Railway case, which states that utilities are not protected from technological change. Protecting companies from the consequences of market forces is corporate socialism. If we’re going to protect shareholders from risk (and it’s even 100% protection), then the grid should be publicly owned instead. Sam Insull set up the regulatory scam a century ago arguing that income assurance was needed for grid investment, and when the whole scheme collapsed in the Depression, the Public Utility Holding Company Act of 1935 (PUHCA) was passed. Shareholders need to pick their poison: either be exposed to risk or transfer their assets to public ownership, but wealthy shareholders should not be protected.

Myth #3A – Utilities made bad investments and should bear the risks: Borenstein is arguing that since the utilities have run the con for the last decade and gotten approval from the CPUC, they should be protected. Yet I submitted testimony repeatedly, starting in 2010, in both PG&E’s and SCE’s GRCs that warned that they had overforecasted load growth. I was correct: statewide retail sales are about the same today as they were in 2006. Grid investment would have been much different if those companies had listened and corrected their forecasts. Further, the IOUs know how to manipulate their regulatory filings to ensure that they still get their internally targeted income. Decoupling, which ensures that the utility receives its guaranteed income regardless of sales, further shields them. From 1994 to 2017, PG&E hit its average allowed rate of return within 0.1%. (More on this later.) A UCB economics graduate student found that the return on equity is up to 4% too high (consistent with analysis I’ve done).

Myth #3B – Time to take away the utility’s monopoly: No, we no longer need to have monopoly electric service. The same was said about telecommunications three decades ago. Now we have multiple entities vying for our dollars. The CPUC conducted a study in 1999 that was included in PG&E’s GRC proposed decision (thanks to the late Richard Bilas) that showed that economies of scale disappeared after several hundred thousand customers (and that threshold is likely lower now). And microgrids are becoming cost effective, especially as PG&E’s rates look like they will surpass 30 cents per kWh by 2026.

Myth #4 – There aren’t barriers to the poor putting panels on their roofs: First, the barriers are largely regulatory, not financial. The CPUC has erected them to prevent aggregation of low-income customers to be able to buy into larger projects that serve these communities.

Second, there are many market mechanisms today where those with lower income are offered products or services at a higher long term price in return for low or no upfront costs. Are we also going to heavily tax car purchases because car leasing is effectively more expensive? What about house ownership vs. rentals? There are issues to address with equity, but to zero in on one small example while ignoring the much wider prevalence sets up another strawman argument.

Further, there are better ways to address the inequity in rooftop solar distribution. That inequity isn’t occurring due to affordability but rather because of split incentives between landlords and tenants.

A much easier and more direct fix would be to modify Public Utilities Code Section 218 to allow local sales among customers, or by landlords or homeowner associations to tenants, and Section 739.5 to allow more flexibility in pricing those sales. But allowing those changes will require that the utilities give up iron-fisted control of electricity production.

Myth #5 – Rooftop solar is the only thing that makes it cost-effective to electrify: Borenstein focuses on the wrong source of high rates. Rooftop solar might be raising rates, but it probably delivered as much in offsetting savings. At most those customers increased rates by 10%, but utility rates are 70-100% above the direct marginal costs of service. The sources of that difference are manifest. PG&E has filed in its 2023 GRC a projected increase in the average standard residential rate to 38 cents per kWh by 2026, and perhaps over 40 cents once undergrounding to mitigate wildfire is included. The NREL studies on microgrids show that individual home microgrids cost about 34 cents per kWh now and battery storage prices are still dropping. Exiting the grid starts to look a lot more attractive.

Maybe if we look only at the status quo as unchanging and accept all of the utilities’ claims about their “necessary” management decisions and the return required to attract investors, then these arguments might hold water. But none of these factors are true based on the empirical work presented in many forums including at the CPUC over the last decade. These beliefs are not so mythological.

Finally, Borenstein finishes with “(a)nd we all need to be open to changing our minds as a result of changing technology and new data.” Yet he has been particularly unyielding on this issue for years, and has not reexamined his own work on electricity markets from two decades ago. The meeting of open minds requires a two-way street.

Authors Ahmad Faruqui, Richard McCann and Fereidoon Sioshansi[1] respond to Professor Severin Borenstein’s much-debated proposal to reform California’s net energy metering, which was first published as a blog and later in a Los Angeles Times op-ed.

by Steven J. Moss and Richard J. McCann, M.Cubed

A potentially key barrier to decarbonizing California’s economy is escalating electricity costs.[1] To address this challenge, the Local Government Sustainable Energy Coalition, in collaboration with Santa Barbara Clean Energy, proposes to create a decarbonization incentive rate, which would enable customers who switch heating, ventilation and air conditioning (HVAC) or other appliances from natural gas, fossil methane, or propane to electricity to pay a discounted rate on the incremental electricity consumed.[2] The rate could also be offered to customers purchasing electric vehicles (EVs).

California has adopted electricity rate discounts previously to incentivize beneficial choices, such as retaining and expanding businesses in-state,[3] and converting agricultural pump engines from diesel to electricity to improve Central Valley air quality.[4]

The decarbonization incentive rate (DIR) would use the same principles as the economic development rate (EDR) tariff. Most importantly, load created by converting from fossil fuels is new load that has only been recently, if at all, included in electricity resource and grid planning. None of this load should incur legacy costs for past generation investments or procurement, nor for past distribution costs. Most significantly, this principle means that these new loads would be exempt from the power cost indifference adjustment (PCIA) stranded asset charge to recover legacy generation costs.

The California Public Utilities Commission (CPUC) also ruled in 2007 that non-bypassable charges (NBCs), such as those for public purpose programs, CARE discount funding, Department of Water Resources Bonds, and nuclear decommissioning, must be recovered in full in discounted tariffs such as the EDR rate. This proposal follows that direction and includes these charges, except the PCIA as discussed above.

Costs for incremental service are best represented by the marginal costs developed by the utilities and other parties either in their General Rate Case (GRC) Phase II cases or in the CPUC’s Avoided Cost Calculator. Since the EDR is developed using analysis from the GRC, the proposed DIR is illustrated here using SCE’s 2021 GRC Phase II information as a preliminary estimate of what such a rate might look like. A more detailed analysis likely will arrive at a somewhat different set of rates, but the relationships should be similar.

For SCE, the current average delivery rate that includes distribution, transmission and NBCs is 9.03 cents per kilowatt-hour (kWh). The average for residential customers is 12.58 cents. The system-wide marginal cost for distribution is 4.57 cents per kWh,[8] and 6.82 cents per kWh for residential customers. Including transmission and NBCs, the system average rate component would be 7.02 cents per kWh, or 22% less. The residential component would be 8.41 cents, or 33% less.[9]

The generation component similarly would be discounted. SCE’s average bundled generation rate is 8.59 cents per kWh and 9.87 cents for residential customers. The rates derived using marginal costs are 5.93 cents for the system average and 6.81 cents for residential, or 31% less. For CCA customers, the PCIA would be waived on the incremental portion of the load. Each CCA would calculate its marginal generation cost as it sees fit.

For bundled customers, the average rate would go from 17.62 cents per kWh to 12.95 cents, or 26.5% less. Residential rates would decrease from 22.44 cents to 15.22 cents, or 32.2% less.
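The illustrative rates above can be reproduced by adding the delivery and generation components and comparing the total with the current bundled average. The sketch below uses only the SCE figures quoted in the preceding paragraphs; the dictionary layout and function names are assumptions made for illustration, not part of the proposal or SCE's workpapers.

```python
# Cents per kWh, as quoted in the text: (current average, marginal-cost based)
COMPONENTS = {
    "system": {"delivery": (9.03, 7.02), "generation": (8.59, 5.93)},
    "residential": {"delivery": (12.58, 8.41), "generation": (9.87, 6.81)},
}
# Current bundled average rates quoted in the text
CURRENT_BUNDLED = {"system": 17.62, "residential": 22.44}

def discount_pct(current, proposed):
    """Percent reduction of the proposed rate relative to the current rate."""
    return (1.0 - proposed / current) * 100.0

def dir_rate(customer_class):
    """Illustrative decarbonization incentive rate: sum of the marginal-cost based components."""
    return sum(proposed for _, proposed in COMPONENTS[customer_class].values())

for customer_class in COMPONENTS:
    rate = dir_rate(customer_class)
    cut = discount_pct(CURRENT_BUNDLED[customer_class], rate)
    print(f"{customer_class}: {rate:.2f} cents/kWh, {cut:.1f}% below the current rate")
```

A fuller analysis would presumably replace these averages with tier-specific and time-of-use values, which is the caveat raised in footnote [9].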

Incremental loads eligible for the discounted decarb rate would be calculated based on projected energy use for the appropriate application. For appliances and HVAC systems, Southern California Gas offers line extension allowances for installing gas services based on appliance-specific estimated consumption (e.g., water heating, cooking, space conditioning).[10] Data employed for those calculations could be converted to equivalent electricity use, with an incremental use credit on a ratepayer’s bill. An alternative approach to determine incremental electricity use would be to rely on the California Energy Commission’s Title 24 building efficiency and Title 20 appliance standard assumptions, adjusted by climate zone.[11]

For EVs, the credit would be based on the average annual vehicle miles traveled in a designated region (e.g., county, city or zip code) as calculated by the California Air Resources Board for use in its EMFAC air quality model or from the Bureau of Automotive Repair (BAR) Smog Check odometer records, and the average fleet fuel consumption converted to electricity. For a car traveling 12,000 miles per year that would equate to 4,150 kWh or 345 kWh per month.
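The EV allowance described above reduces to a one-line calculation once the regional mileage and a fleet-average efficiency are known. In this sketch the efficiency of roughly 0.346 kWh per mile is not a published figure; it is simply the value implied by the 12,000-mile, 4,150-kWh example in the text, and the function name is hypothetical.

```python
def ev_allowance_kwh(annual_miles, kwh_per_mile):
    """Return (annual, monthly) incremental kWh eligible for the discounted rate."""
    annual_kwh = annual_miles * kwh_per_mile
    return annual_kwh, annual_kwh / 12.0

# Assumption: efficiency implied by the example in the text (4,150 kWh over 12,000 miles)
IMPLIED_KWH_PER_MILE = 4150 / 12000

annual_kwh, monthly_kwh = ev_allowance_kwh(12_000, IMPLIED_KWH_PER_MILE)
print(f"Annual allowance: {annual_kwh:,.0f} kWh")
print(f"Monthly allowance: {monthly_kwh:.1f} kWh")
```

In practice the mileage input would come from EMFAC or Smog Check odometer data for the customer's region, as the paragraph describes.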

[1] CPUC, “Affordability Phase 3 En Banc,” https://www.cpuc.ca.gov/industries-and-topics/electrical-energy/affordability, February 28-March 1, 2022.

[2] Remaining electricity use after accounting for incremental consumption would be charged at the current otherwise applicable tariff (OAT).

[3] California Public Utilities Commission, Decision 96-08-025. Subsequent decisions have renewed and modified the economic development rate (EDR) for the utilities individually and collectively.

[4] D.05-06-016, creating the AG-ICE tariff for Pacific Gas & Electric and Southern California Edison.

[5] SCE, Schedules EDR-E, EDR-A and EDR-R.

[6] PG&E, Schedule AG-ICE—Agricultural Internal Combustion Engine Conversion Incentive Rate.

[7] EDR and AG-ICE were approved by the Commission in separate utility applications. The mobile home park utility system conversion program was first initiated by a Western Mobile Home Association petition and then converted into a rulemaking, with significant revenue requirement implications.

[8] Excluding transmission and NBCs.

[9] Tiered rates pose a significant barrier to electrification and would cause the effective discount to be greater than estimated herein. The estimates above were measured against the average electricity rate, but added demand would be charged at the much higher Tier 2 rate. The decarb allowance could be introduced as a new Tier 0 below the current Tier 1.

[10] SCG, Rule No. 20 Gas Main Extensions, https://tariff.socalgas.com/regulatory/tariffs/tm2/pdf/20.pdf, retrieved March 2022.

[11] See https://www.energy.ca.gov/programs-and-topics/programs/building-energy-efficiency-standards; https://www.energy.ca.gov/rules-and-regulations/building-energy-efficiency/manufacturer-certification-building-equipment; https://www.energy.ca.gov/rules-and-regulations/appliance-efficiency-regulations-title-20

In the 1990s, California’s industrial customers threatened to build their own self-generation plants and leave the utilities entirely. Escalating generation costs due to nuclear plant cost overruns and too-generous qualifying facilities (QF) contracts had driven up rates, and the technology that made QFs possible also allowed large customers to consider self-generating. In response, California “restructured” its utility sector to introduce competition in the generation segment and to get the utilities out of that part of the business. Unfortunately, the initiative failed in a big way, and we were left with a hybrid system that some blame for rising rates today.

Those rising rates may be introducing another threat to the utilities’ business model, and it may be more existential this time. A previous blog post described how Pacific Gas & Electric’s 2022 Wildfire Mitigation Plan Update combined with the 2023 General Rate Application could lead to a 50% rate increase from 2020 to 2026. For standard rate residential customers, the average rate could be 41.9 cents per kilowatt-hour.

For an average customer that translates to $2,200 per year per kilowatt of peak demand. Using PG&E’s cost of capital, that implies that an independent self-sufficient microgrid costing $15,250 per kilowatt could be funded from avoiding paying PG&E bills.

The National Renewable Energy Laboratory (NREL) study referenced in this blog estimates that a stand-alone residential microgrid with 7 kilowatts of solar paired with a 5 kilowatt / 20 kilowatt-hour battery would cost between $35,000 and $40,000. The savings from avoiding PG&E rates could justify spending $75,000 to $105,000 on such a system, so a residential customer could save up to $70,000 by defecting from the grid. Even if NREL has underpriced and undersized this example system, that is a substantial margin.
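The order of magnitude of these figures can be checked with a short calculation. This is a sketch under a stated assumption: the post does not say which peak demand underlies the $75,000 to $105,000 range, so a household peak of 5 to 7 kilowatts is assumed here because it roughly reproduces that range at $15,250 per kilowatt.

```python
VALUE_PER_KW = 15_250               # $ per kW of peak demand, from the text
NREL_COST_RANGE = (35_000, 40_000)  # $ microgrid cost range, from the text
PEAK_KW_RANGE = (5, 7)              # assumption: household peak demand in kW

# Microgrid spending that avoided PG&E bills could fund at each peak demand.
budgets = [VALUE_PER_KW * kw for kw in PEAK_KW_RANGE]

# Net savings: best case pairs the highest budget with the lowest system cost.
max_saving = max(budgets) - min(NREL_COST_RANGE)
min_saving = min(budgets) - max(NREL_COST_RANGE)

print(f"Justified spending: ${budgets[0]:,} to ${budgets[1]:,}")
print(f"Net savings: ${min_saving:,} to ${max_saving:,}")
```

Under that assumption the justified spending comes to $76,250 to $106,750 and the best-case saving to $71,750, in line with the rounded figures above.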

This time it’s not just a few large customers with choice thermal demands and electricity needs—this would be a large swath of PG&E’s residential customer class. It would be the customers who are most affluent and most able to pay PG&E’s extraordinary costs. If many of these customers view this opportunity to exit favorably, the utility could truly face a death spiral that encourages even more customers to leave. Those who are left behind will demand more relief in some fashion, but those customers who already defected will not be willing to bail out the company.

In this scenario, what is PG&E’s (or Southern California Edison’s and San Diego Gas & Electric’s) exit strategy? Trying to squeeze current NEM customers likely will only accelerate exit, not stifle it. The recent two-day workshop on affordability at the CPUC avoided discussing how utility investors should share in solving this problem, treating their cost streams as inviolable. The more likely solution requires substantial restructuring of PG&E to lower its revenue requirements, including by reducing income to shareholders.
